There Not Here Installation

San Francisco — 2018

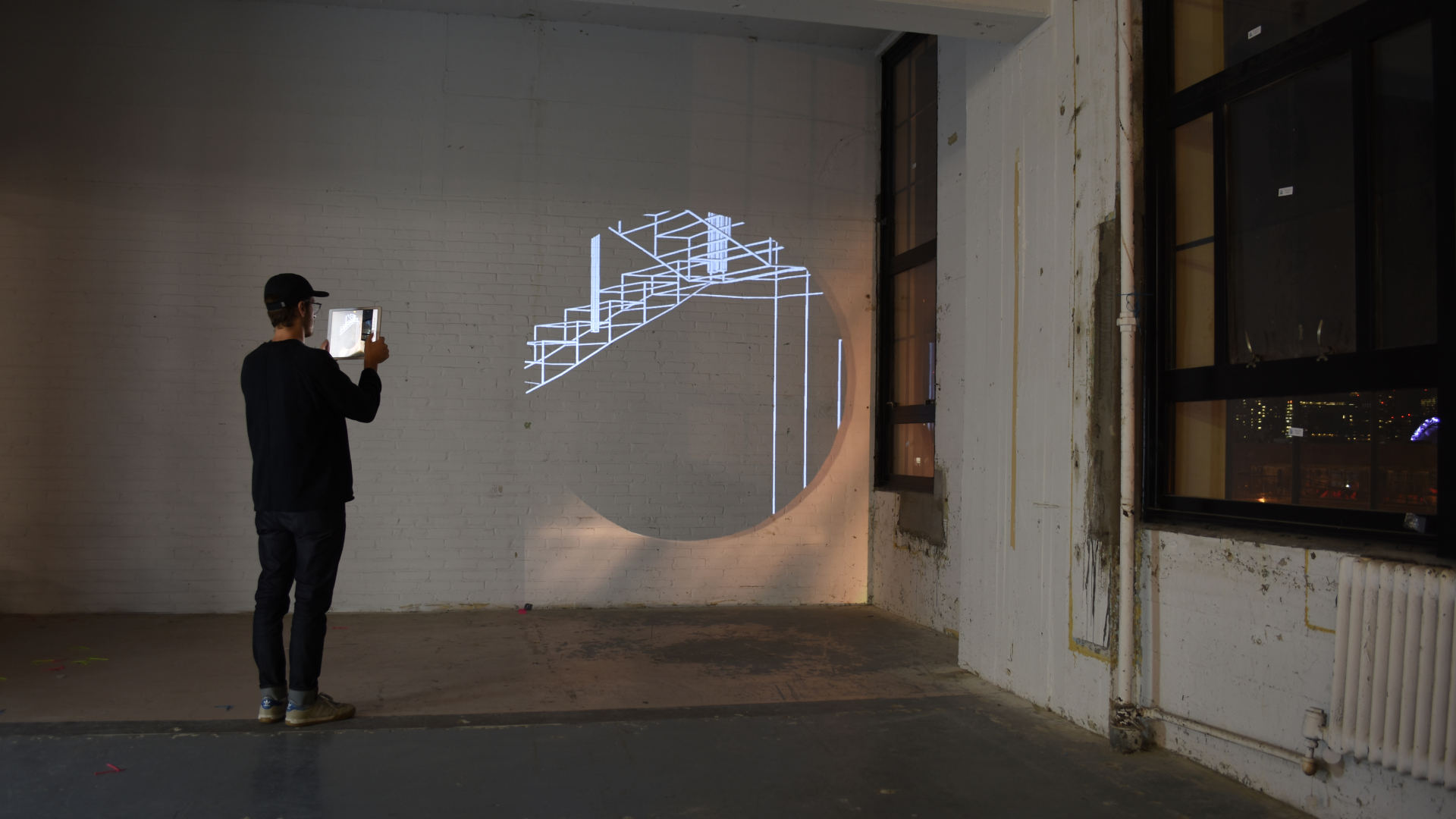

Augmented reality lets us blend new worlds with our own. But aside from surface planes and shadows, our physical surroundings are mostly foreign to the experience. Shot and rendered in real time, the following experiments explore how light, sound and motion from outside our devices can create multi-dimensional AR experiences that challenge our perspective of "here" and "there."

— AR, interactive, science

Module 01

Light From Non-Objects

Light as an indicator

By definition, light is a natural agent that stimulates sight and makes things visible. By synchronizing DMX-controllable lights to AR objects, we can observe the presence, speed or proximity of virtual objects in our physical surroundings.
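One way to picture light as a proximity indicator is a simple mapping from a virtual object's distance to an 8-bit DMX channel level (0 to 255). The sketch below is only an illustration under stated assumptions: the function name `proximity_to_dmx`, the 3-meter default range, and the linear falloff are all hypothetical, and the installation's actual mapping is not described here.

```python
def proximity_to_dmx(distance_m: float, max_range_m: float = 3.0) -> int:
    """Map a virtual object's distance to an 8-bit DMX level.

    Hypothetical linear falloff: 255 when the object touches the light,
    0 when it is at or beyond max_range_m (an assumed value).
    """
    if max_range_m <= 0:
        raise ValueError("max_range_m must be positive")
    # Clamp the distance into [0, max_range_m] before scaling.
    clamped = min(max(distance_m, 0.0), max_range_m)
    closeness = 1.0 - clamped / max_range_m
    # DMX channel values are single bytes, so scale to 0..255.
    return int(closeness * 255 + 0.5)
```

A real setup would send the resulting value to a DMX interface every frame; that transport layer is left out because it depends on the hardware in use.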

Impossible Projection

With AR, impossible objects can exist among us. In this example, the projected shadow of an AR Tesseract illuminates the ground as a 3D cube.

Module 02

The dichotomy of sound

Sound from non-objects

It's easy to recognize an environmental sound from a speaker. Here, sound created from a drum beater and synchronized to digital objects creates spatial audio cues of virtual objects hitting a physical surface.

Non-objects from sound

In this experiment, we used MIDI to visualize the pressed keys of a piano in real time. Enlarging those objects to room or venue scale lets a user view the keys up close and from their own perspective.
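As a rough sketch of how pressed keys can be read from MIDI, the snippet below decodes raw three-byte note messages and names the corresponding piano key. The helper names `parse_key_event` and `describe_key` are hypothetical, and the octave numbering assumes the common convention where note 60 is C4; how the experiment itself received and rendered MIDI is not specified.

```python
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]


def parse_key_event(status: int, note: int, velocity: int):
    """Return (note_number, pressed) for note-on/off messages, else None."""
    kind = status & 0xF0  # upper nibble is the message type, lower is the channel
    if kind == 0x90:
        # A note-on with velocity 0 is treated as a note-off.
        return note, velocity > 0
    if kind == 0x80:
        return note, False
    return None  # not a key press or release (e.g. a control change)


def describe_key(note: int):
    """Return (key_name, is_black_key) for a MIDI note number."""
    name = NOTE_NAMES[note % 12]
    octave = note // 12 - 1  # assumed convention: note 60 is C4
    return f"{name}{octave}", "#" in name
```

For example, the message `(0x90, 60, 100)` decodes to note 60 pressed, which `describe_key` names as a white key, C4. A visualizer could use the `pressed` flag to show or hide the matching virtual key.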

Module 03

Coordinated Motion

Non-object inertia

Game engines have replicated our scientific laws to create realistic simulations of our physical world. In a modern twist on the classic "Newton's Cradle," we tested the boundaries of Newton's first law to see how closely we could translate movement between these two worlds.
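The idealized behavior of a Newton's cradle can be sketched with the textbook formula for a one-dimensional, perfectly elastic collision, where both momentum and kinetic energy are conserved. This is a simplified illustration, not the physics setup used in the project; the function name `elastic_collision_1d` is hypothetical, and real simulations also have to deal with friction, damping, and timing between the virtual and physical sides.

```python
def elastic_collision_1d(m1: float, v1: float, m2: float, v2: float):
    """Velocities after a perfectly elastic head-on collision in 1D.

    Derived from conservation of momentum and kinetic energy.
    """
    total = m1 + m2
    v1_after = ((m1 - m2) * v1 + 2 * m2 * v2) / total
    v2_after = ((m2 - m1) * v2 + 2 * m1 * v1) / total
    return v1_after, v2_after
```

With equal masses the two bodies simply swap velocities, which is why a moving ball in a cradle stops and the resting ball leaves at the same speed, whether that resting ball is physical or virtual.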

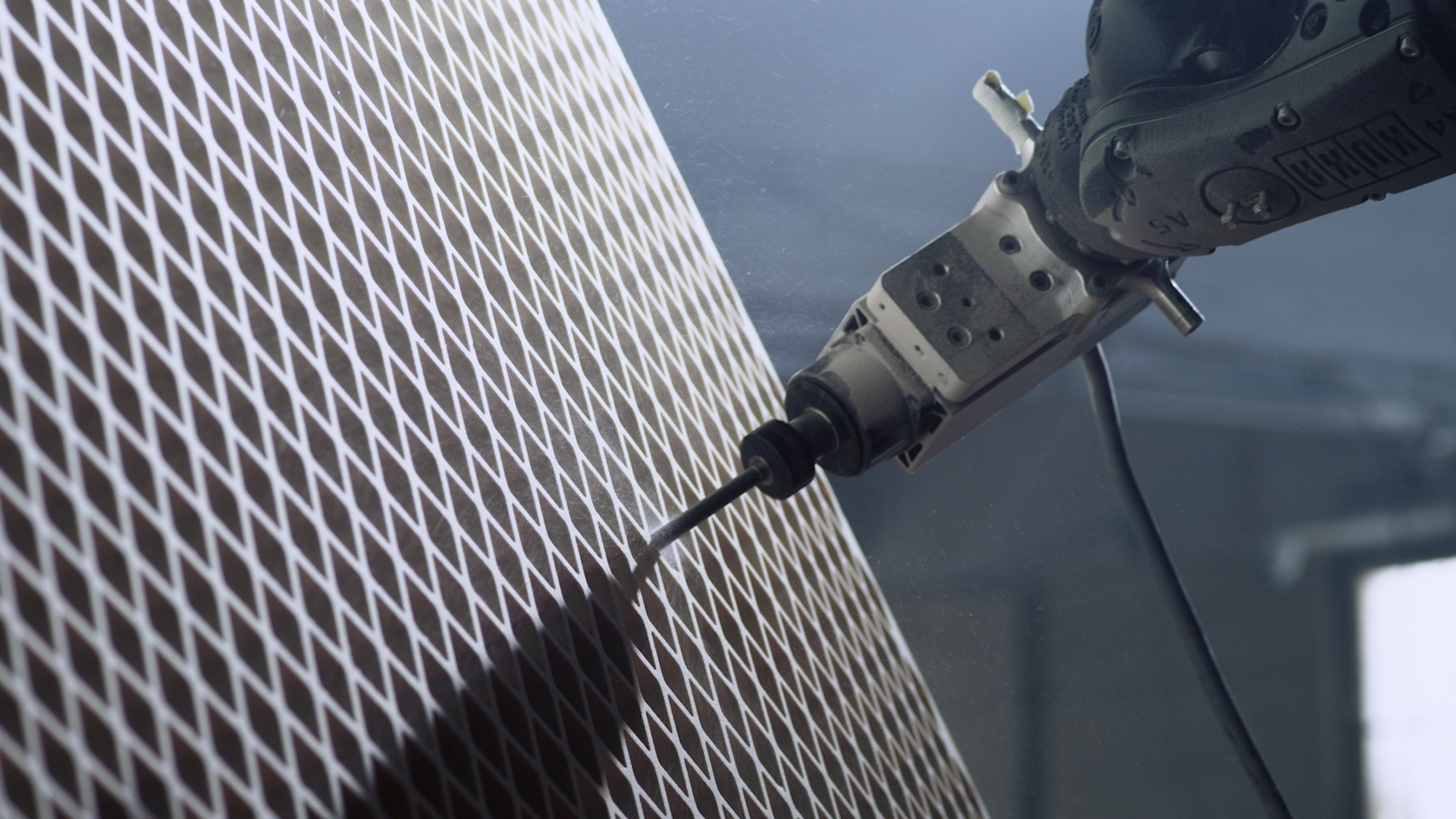

Precision calibration

Robots have the unique ability to "touch" virtual objects based on their 3D spatial awareness. To test the boundaries of this interaction, we built a Unity-based real-time controller for our robot arm, creating a precise connection between virtual and physical objects in motion.

SCIENCE.AF

Credits

*Work produced at Novel

ECD: Jeff Linnell

Design Team: Jeremy Stewart, Brandon Kruysman, Jonathan Proto

Executive Producer: Julia Gottlieb

Special Thanks - Mimic team at Autodesk: Evan Atherton, Nick Cote, Jason Cuenco

Additional Robotics Support: Eli Reekmans

Video Production: Stebs, Natalie Rhold

Fabrication: Dakota Wilder, Andrew Devansky